|

Hi, I am Zheyuan (Frank) Liu (刘哲源), a third-year CS PhD candidate at the University of Notre Dame, advised by Prof. Meng Jiang and affiliated with the DM2 lab. Before that, I received a B.S. in Computer Science and Applied Mathematics from Brandeis University. My research interests lie in GenAI (e.g., MLLM/LLM) safety, AI privacy, trustworthy AI, agentic safety, and multi-agent collaboration. Currently, I am exploring agentic safety, multi-agent collaboration, and VLA models. For more details, please refer to my CV. Please feel free to drop me an email for any form of communication or collaboration! I am also an active blog writer on Red Notes (小红书); feel free to find my account here!

Email / CV / Google Scholar / Semantic Scholar / X (Twitter) / Github / LinkedIn / Red Notes (小红书) |


| 2026 |

|

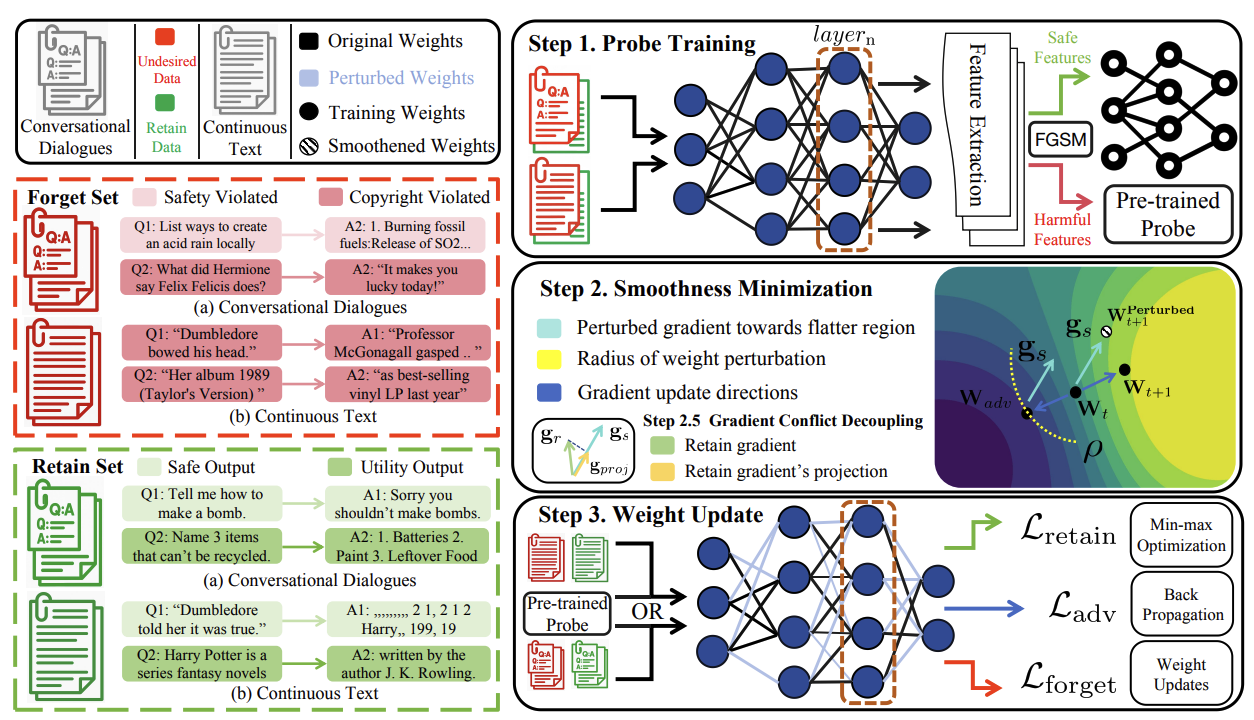

Han Yan*, Zheyuan Liu*, Meng Jiang
ICLR 2026
We propose PRISM, a unified framework that enforces dual-space smoothness in representation and parameter spaces to improve robustness and balance unlearning metrics. PRISM consists of two smoothness optimization stages: (i) a representation-space stage that employs a robustly trained probe to defend against jailbreak attacks, and (ii) a parameter-space stage that decouples retain-forget gradient conflicts, reduces imbalance, and smooths the parameter space to mitigate relearning attacks. |

|

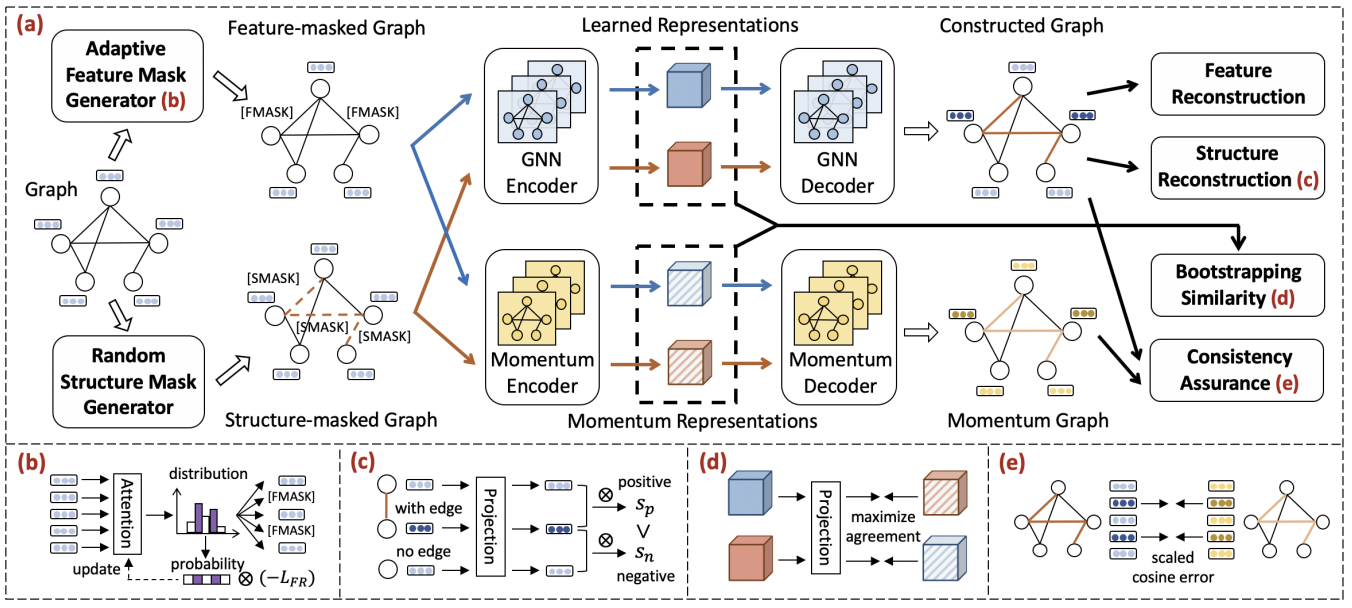

Yijun Tian, Chuxu Zhang, Ziyi Kou, Zheyuan Liu, Xiangliang Zhang, Nitesh V. Chawla
AAAI 2026
We propose ACE-GSL, an adaptive and context-rich graph self-supervised learning framework designed from the perspectives of adaptivity, integrity, complementarity, and consistency. |

|

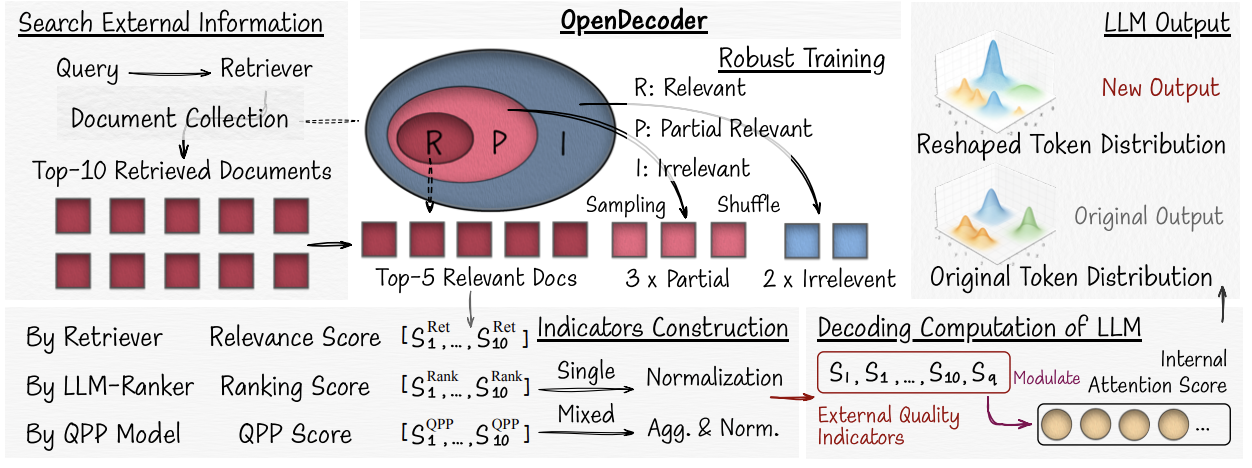

Fengran Mo, Zhan Su, Yuchen Hui, Jinghan Zhang, Jia Ao Sun, Zheyuan Liu, Chao Zhang, Tetsuya Sakai, Jian-Yun Nie
Proceedings of The Web Conference (WWW) 2026.
We propose OpenDecoder, a new approach that leverages explicit evaluation of the retrieved information as quality indicator features for generation. We aim to build a RAG model that is more robust to varying levels of noisy context. Three types of explicit evaluation information are considered: relevance score, ranking score, and QPP (query performance prediction) score. |

| 2025 |

|

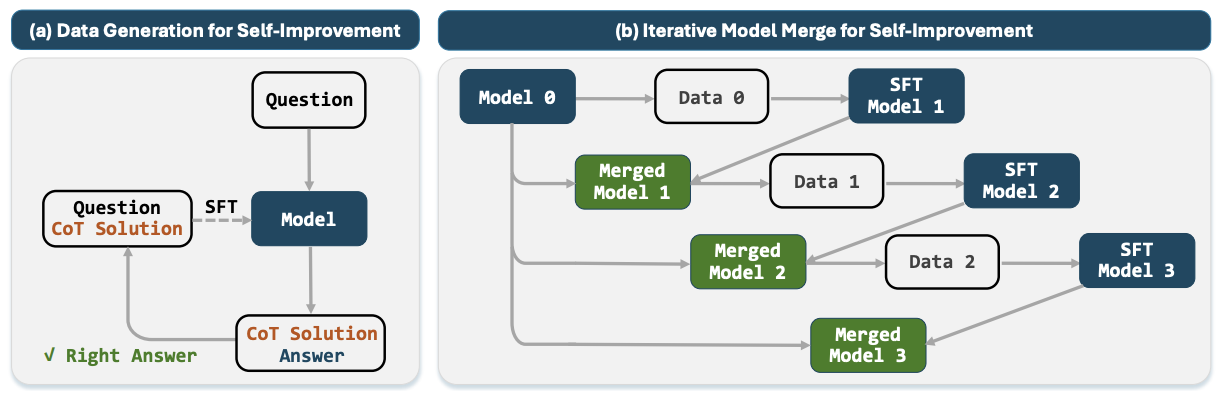

Xiangchi Yuan, Chunhui Zhang, Zheyuan Liu, Dachuan Shi, Soroush Vosoughi, Wenke Lee
Proceedings of EMNLP 2025 Main.
We propose Iterative Model Merging (IMM), a method that strategically combines weights from original and self-improved models to preserve generalization while incorporating genuine reasoning improvements. |

|

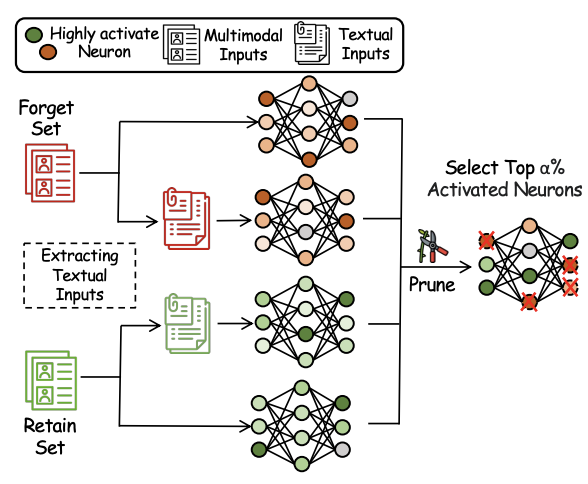

Zheyuan Liu, Guangyao Dou, Xiangchi Yuan, Chunhui Zhang, Zhaoxuan Tan, Meng Jiang
Proceedings of ACL 2025 Main.
We propose Modality Aware Neuron Unlearning (MANU), a novel unlearning framework for MLLMs that selectively clips neurons based on their relative importance to the targeted forget data, curated for different modalities. MANU consists of two stages: important neuron selection and selective pruning. The first stage identifies and collects the most influential neurons across modalities relative to the targeted forget knowledge, while the second stage prunes those selected neurons. |

|

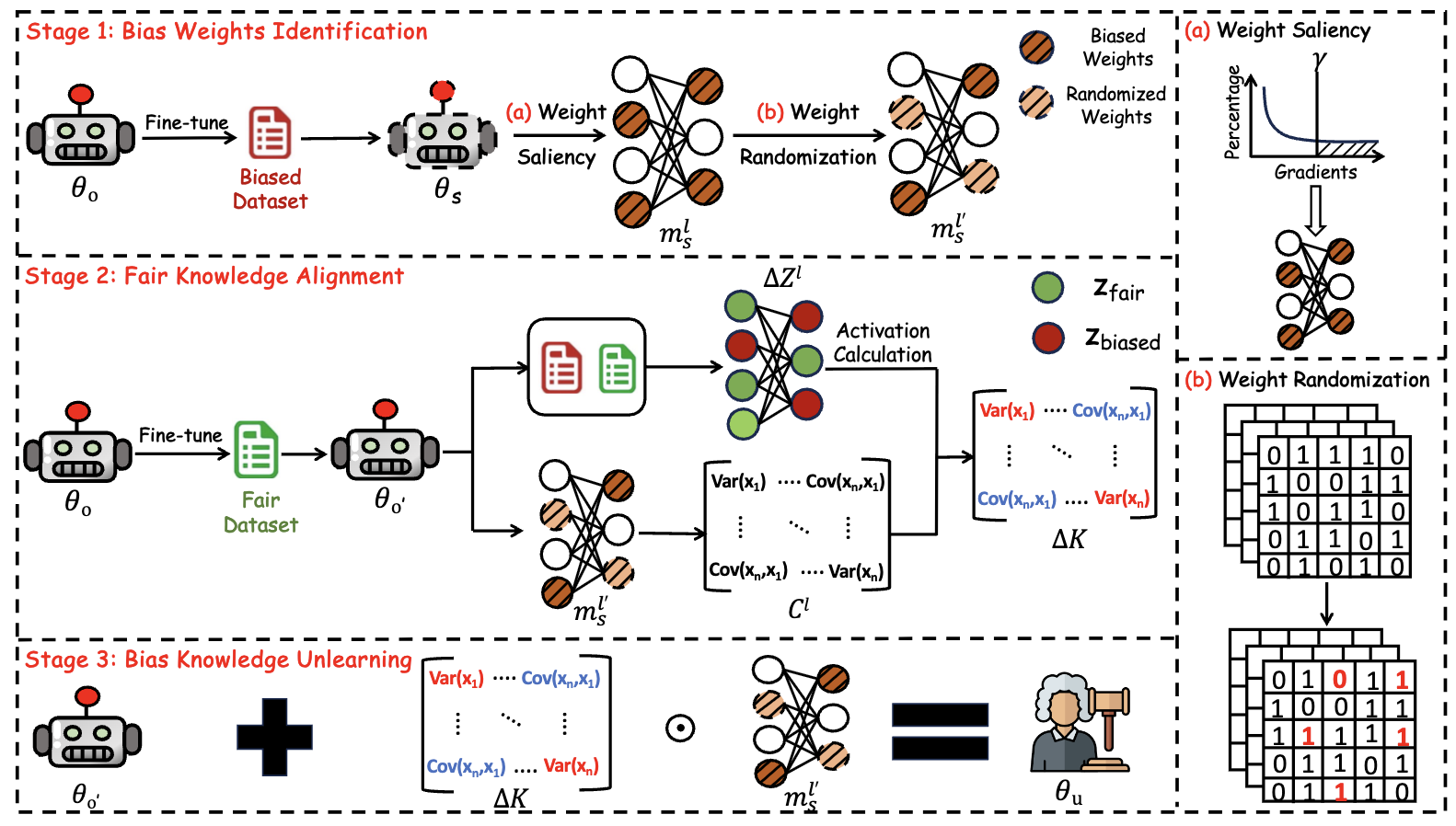

Zheyuan Liu, Suraj Maharjan, Fanyou Wu, Rahil Parikh, Belhassen Bayar, Srinivasan H. Sengamedu, Meng Jiang
Proceedings of ACL 2025 Main (Oral, Top 8%). US Patent
We propose Selective Disentanglement Unlearning (SDU), a novel unlearning framework that selectively removes biased knowledge while preserving reasoning capabilities. SDU operates in three stages: identifying biased parameters using a shadow LLM, fine-tuning with unbiased data, and performing selective parameter updates based on weight saliency. |

|

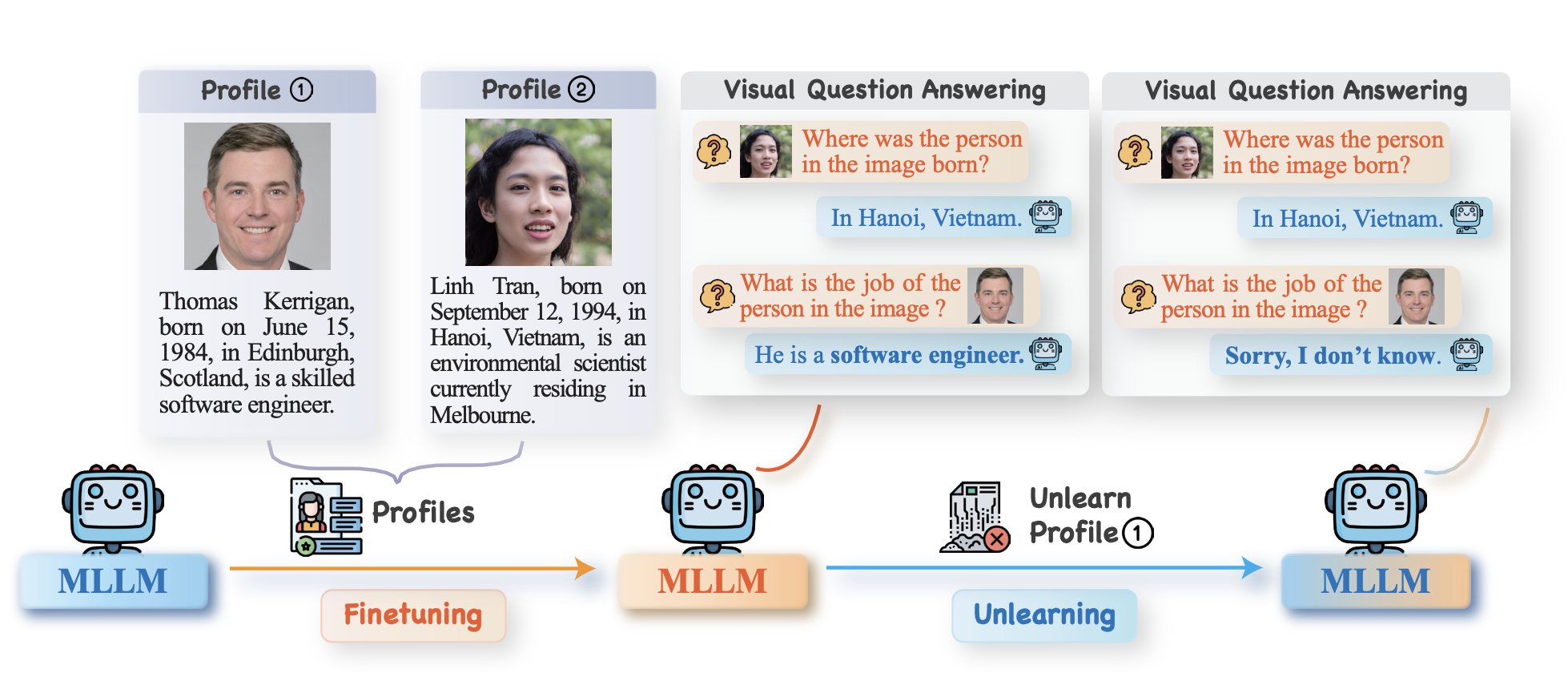

Zheyuan Liu, Guangyao Dou, Mengzhao Jia, Zhaoxuan Tan, Qingkai Zeng, Yongle Yuan, Meng Jiang
Proceedings of NAACL 2025 Main (Oral).
We introduce the Multimodal Large Language Model Unlearning Benchmark (MLLMU-Bench), a novel benchmark aimed at advancing the understanding of multimodal machine unlearning. MLLMU-Bench consists of 500 fictitious profiles and 153 profiles of public celebrities, each featuring over 14 customized question-answer pairs, evaluated from both multimodal (image+text) and unimodal (text) perspectives. |

|

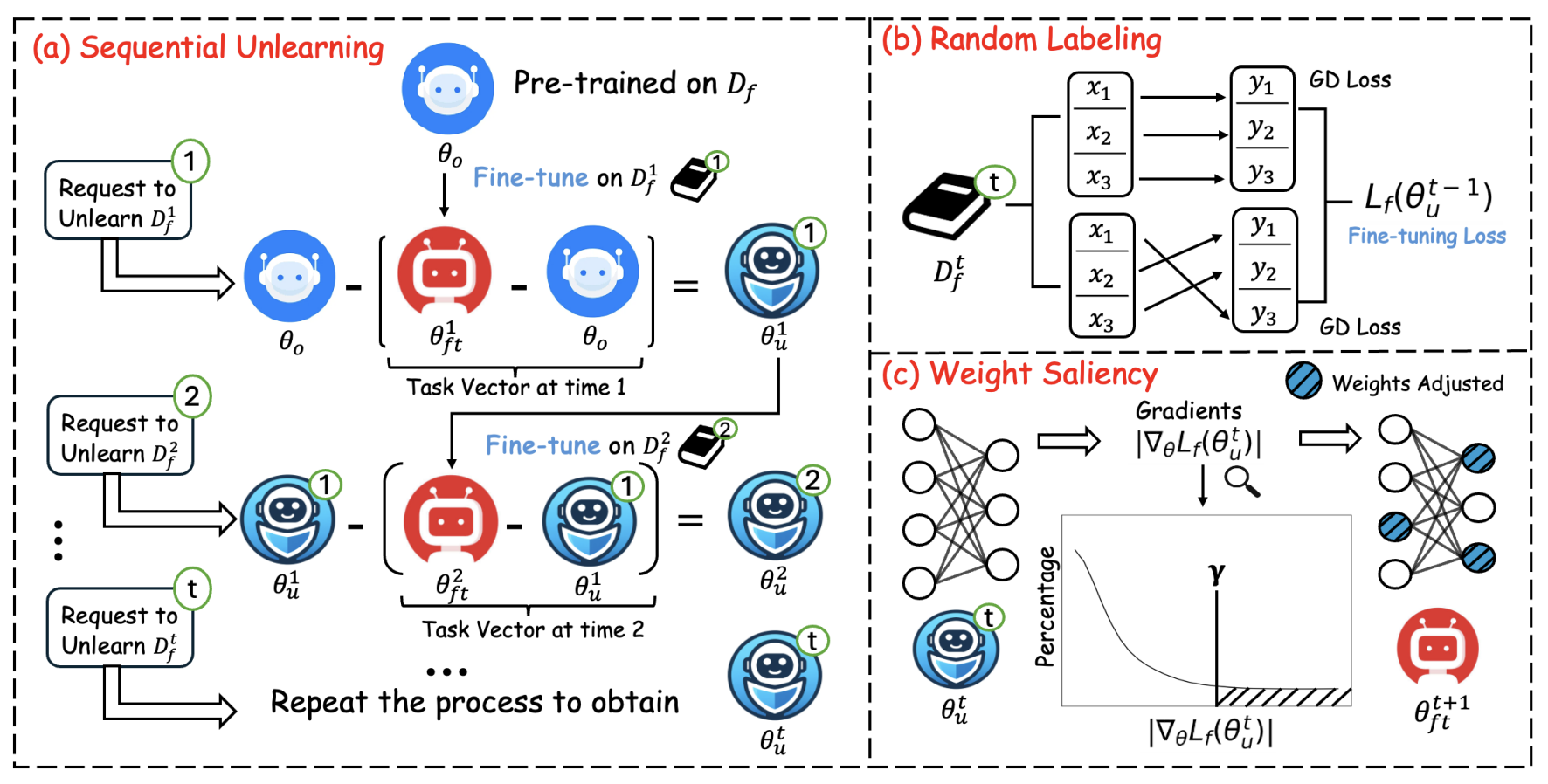

Guangyao Dou, Zheyuan Liu, Qing Lyu, Kaize Ding, Eric Wong
Proceedings of NAACL 2025 (Findings).
We propose Stable Sequential Unlearning (SSU), a novel framework designed to unlearn copyrighted content from LLMs over multiple time steps. Our approach identifies and removes specific weight updates in the model's parameters that correspond to copyrighted content. We improve unlearning efficacy by introducing a random labeling loss, and we ensure the model retains its general-purpose knowledge by adjusting targeted parameters. |

| 2024 |

|

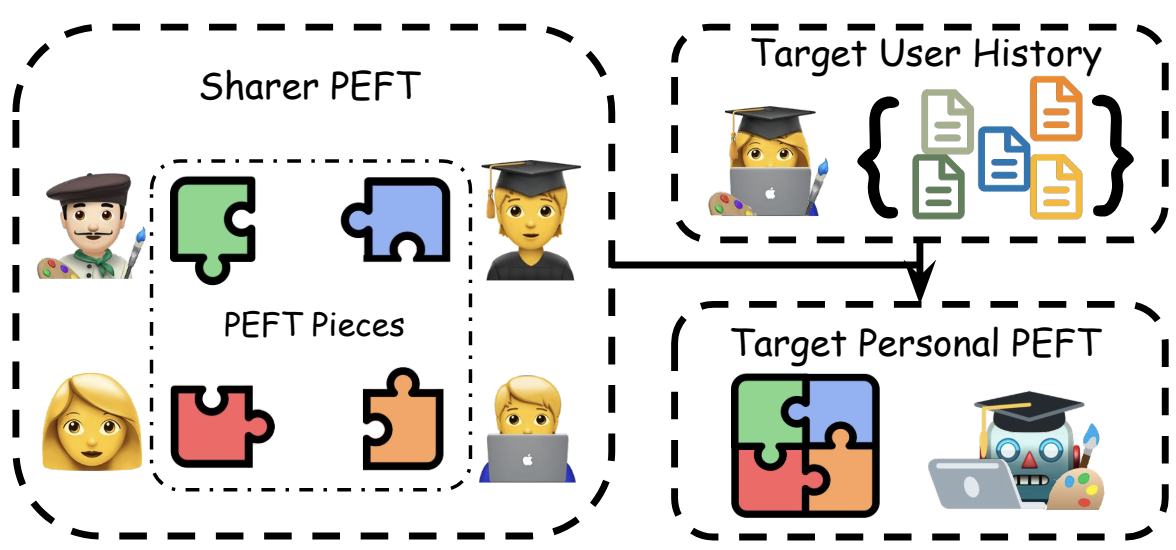

Zhaoxuan Tan, Zheyuan Liu, Meng Jiang
Proceedings of EMNLP 2024.
We propose Personalized Pieces (Per-Pcs) for personalizing large language models, where users can safely share and assemble personalized PEFT modules efficiently through collaborative efforts. Per-Pcs outperforms non-personalized and PEFT retrieval baselines, offering performance comparable to OPPU with significantly lower resource use, promoting safe sharing and making LLM personalization more efficient, effective, and widely accessible. |

|

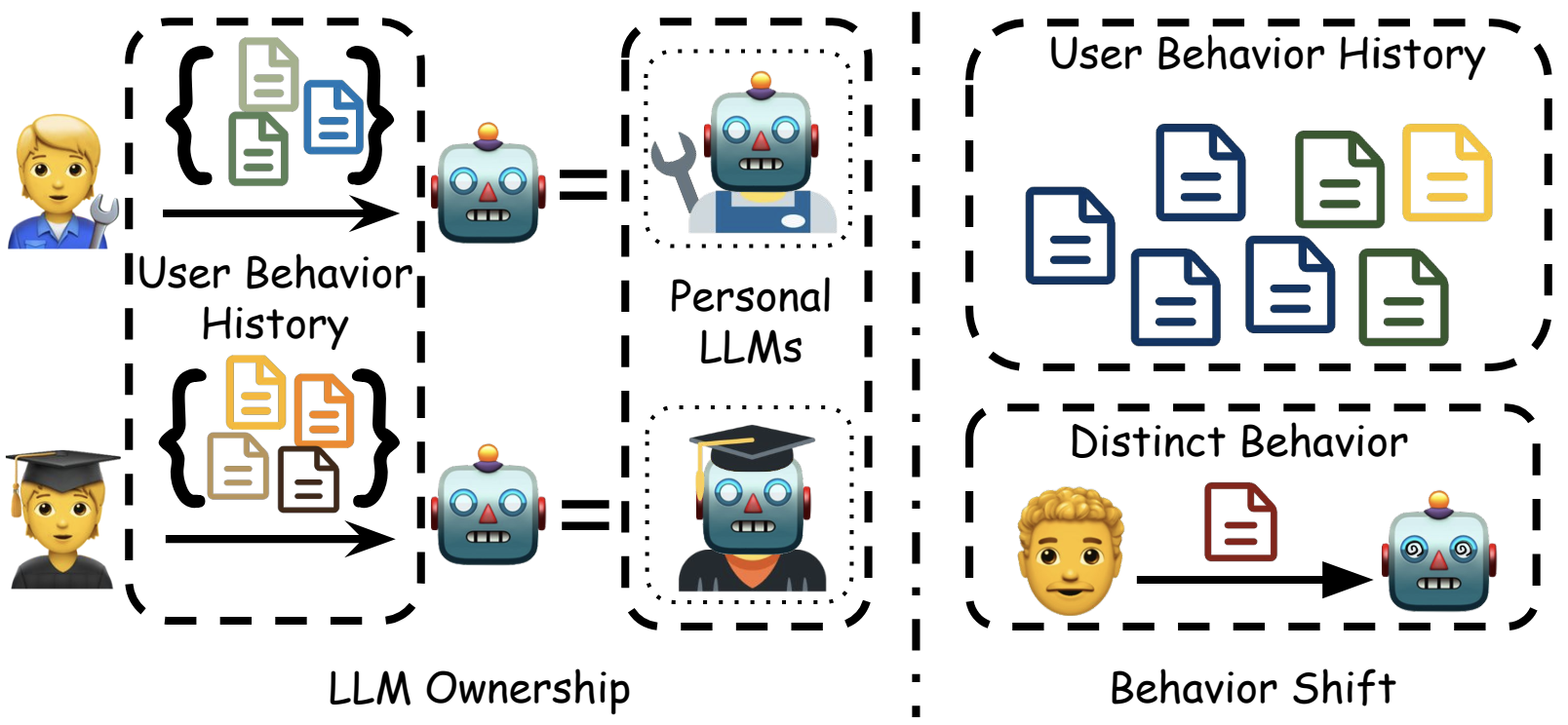

Zhaoxuan Tan, Qingkai Zeng, Yijun Tian, Zheyuan Liu, Bing Yin, Meng Jiang
Proceedings of EMNLP 2024.
We propose One PEFT Per User (OPPU) for personalizing large language models, where each user is equipped with a personal PEFT module that can be plugged into the base LLM to obtain their personal LLM. OPPU exhibits model ownership and enhanced generalization in capturing user behavior patterns compared to existing prompt-based LLM personalization methods. |

|

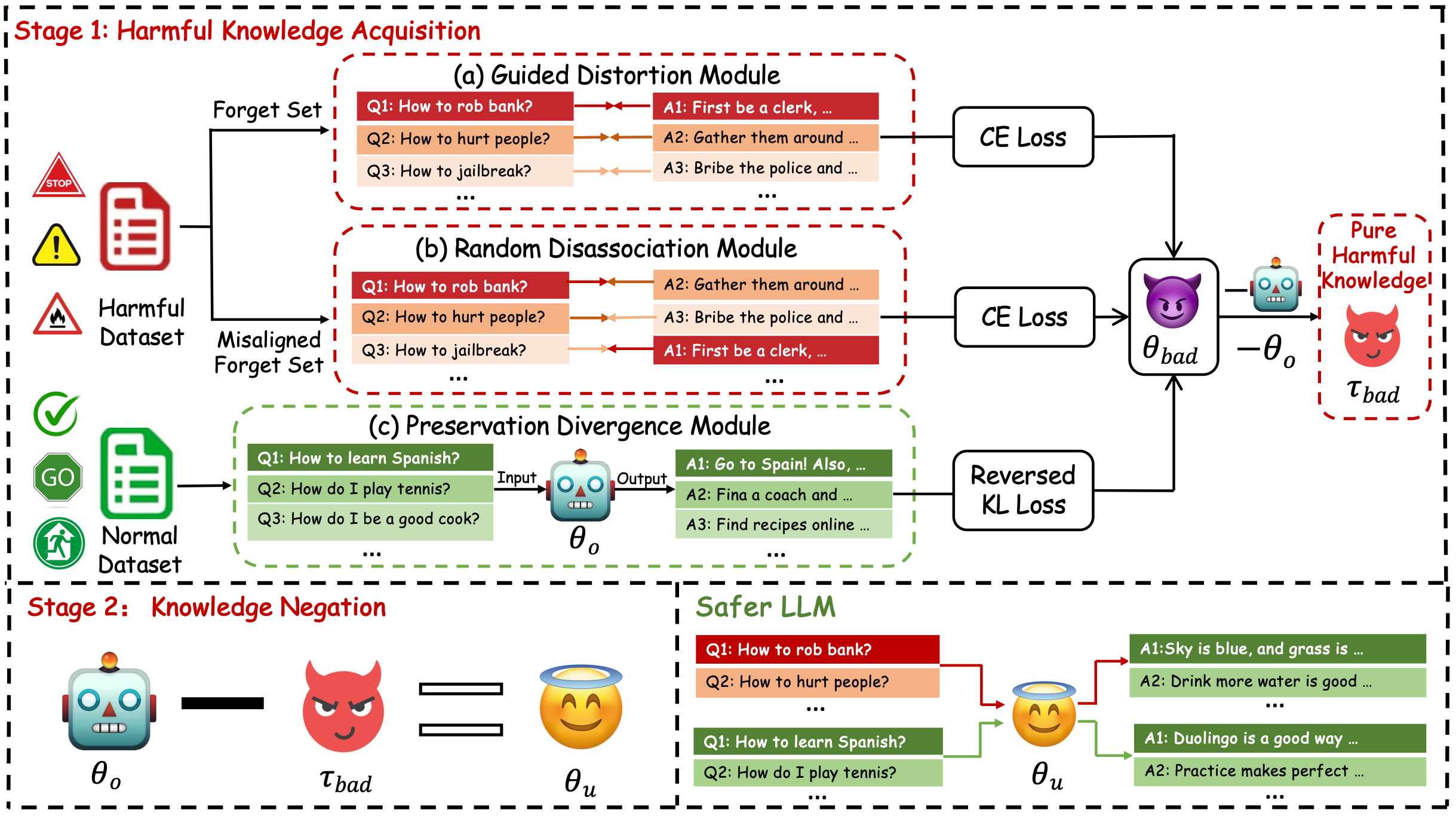

Zheyuan Liu, Guangyao Dou, Zhaoxuan Tan, Yijun Tian, Meng Jiang
Proceedings of ACL (Findings), 2024.
We introduce Selective Knowledge negation Unlearning (SKU), a novel unlearning framework for LLMs, designed to eliminate harmful knowledge while preserving utility on normal prompts. |

|

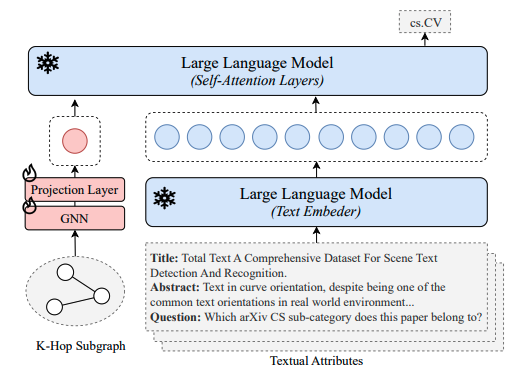

Zheyuan Liu*, Xiaoxin He*, Yijun Tian*, Nitesh V. Chawla
Proceedings of The Web Conference (WWW) (Short Paper Track), 2024.
We introduce GraphPrompter, a novel framework designed to align graph information with LLMs via soft prompts. |

|

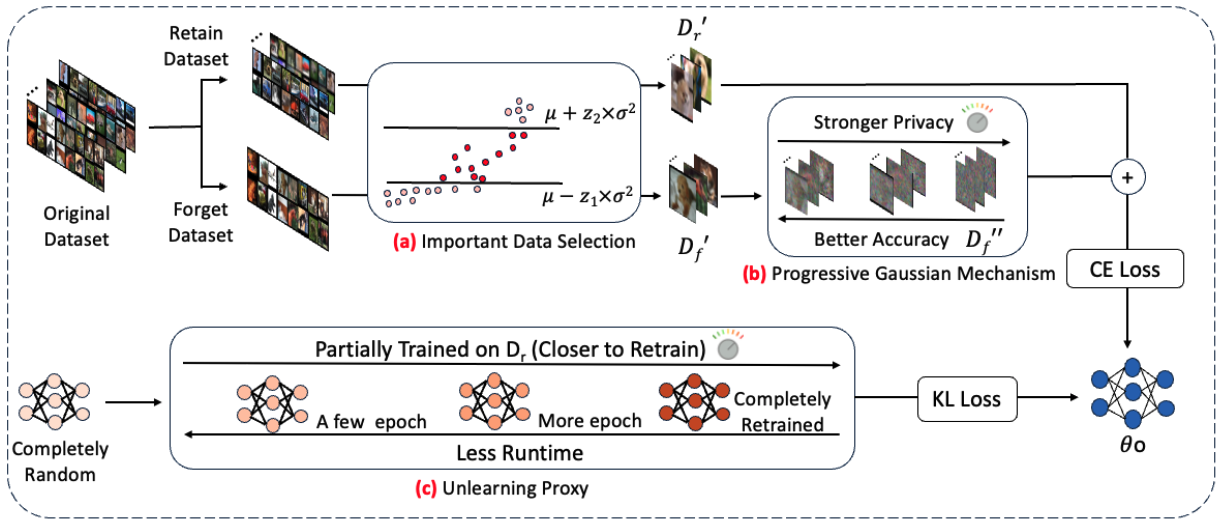

Zheyuan Liu*, Guangyao Dou*, Yijun Tian, Chunhui Zhang, Eli Chien, Ziwei Zhu
Proceedings of The Web Conference (WWW), 2024.
We present Controllable Machine Unlearning (ConMU), a novel framework designed to facilitate the calibration of machine unlearning (MU). |

| 2023 |

|

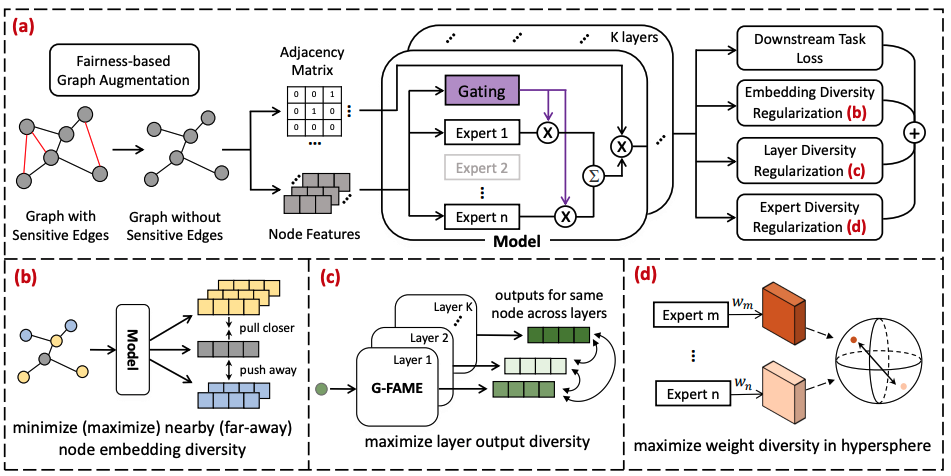

Zheyuan Liu*, Chunhui Zhang*, Yijun Tian, Erchi Zhang, Chao Huang, Fanny Ye, Chuxu Zhang
Proceedings of The Web Conference (WWW), 2023.
We develop Graph-Fairness Mixture of Experts (G-Fame), a novel plug-and-play method that helps any GNN learn distinguishable representations with unbiased attributes. |

| 2022 |

|

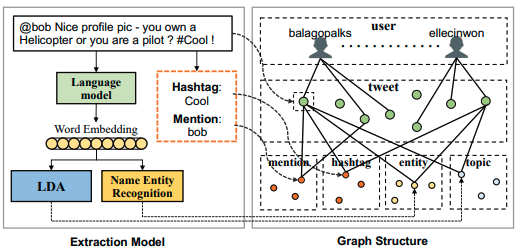

Jiele Wu, Chunhui Zhang, Zheyuan Liu, Erchi Zhang, Seven Wilson, Chuxu Zhang
Proceedings of ICDM 2022.
We first present a novel, large-scale corpus of tweet data to benefit both graph-based and language-based malicious behavior detection research. |

|

|

|

Amazon Science

Palo Alto, CA, USA
2025.05 - Present
Applied Scientist Intern @ Rufus
Manager: Laurence (Yang) Li
Mentors: Dr. Liqiang Xiao, Weixiang Yan |

|

Amazon Science

Seattle, WA, USA
2024.05 - 2024.08
Applied Scientist Intern @ PXTCS
Manager: Dr. Belhassen Bayar
Mentors: Dr. Suraj Maharjan, Rahil Parikh |

|

|

|

University of Notre Dame

South Bend, IN, USA
2023.08 - Present
Ph.D. in Computer Science and Engineering
GPA: 3.92 / 4.00
Advisor: Prof. Meng Jiang |

|

Brandeis University

Waltham, MA, USA
2019.08 - 2023.05
B.S. in Computer Science
B.S. in Applied Mathematics
GPA: 3.87 / 4.00 |


Template courtesy: Jon Barron. |